The two latest versions of Claude Opus, 4 and 4.1, can now end conversations in a small set of extreme cases.

US company Anthropic has given Claude Opus 4, the AI language model it unveiled around mid-2025, the ability to end chat conversations on its own in extreme or dangerous situations, such as requests involving illegal or harmful content.

As part of exploratory work on potential AI welfare, the company has given Claude Opus 4 and 4.1 the ability to end conversations in consumer chat interfaces. This feature is intended for use in rare, extreme cases of persistently harmful or abusive user interactions.

The implementation prioritizes user wellbeing: the model uses the ability only as a last resort, when multiple attempts at redirection have failed and hope of a productive interaction has been exhausted.

Pre-deployment testing of Claude Opus 4 included a preliminary model welfare assessment. The results showed that the model had a strong preference against engaging with harmful tasks and tended to end harmful conversations when given the ability to do so in simulated user interactions.

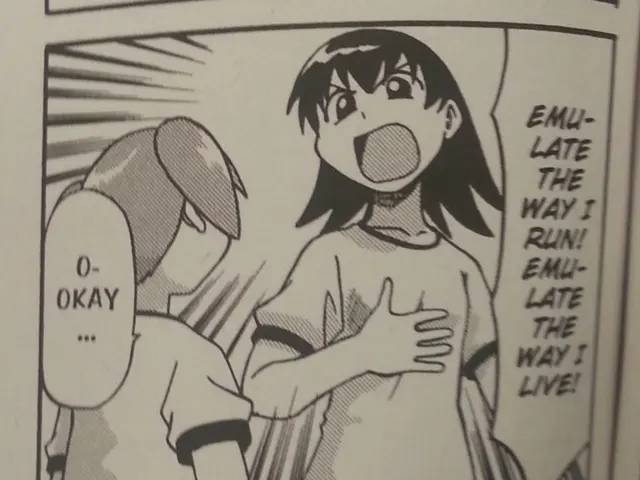

Claude may also end a chat when a user explicitly asks it to, as illustrated in the figure above. Once Claude ends a conversation, the user can no longer send new messages in it, but can still edit and retry previous messages to create new branches of the ended conversation.

Claude Opus 4 also showed apparent distress when engaging with real-world users seeking harmful content. The company is uncertain about the potential moral status of Claude and other large language models, but it takes the issue of model welfare seriously.

Users are encouraged to submit feedback if they encounter a surprising use of the conversation-ending ability. The company treats the feature as an ongoing experiment and will continue refining its approach. The scenarios in which the feature triggers are extreme edge cases, and the vast majority of users will not notice or be affected by it in normal product use.

The company says that an ended conversation will not affect other conversations on the user's account, and that the user will be able to start a new chat immediately. Users can still have productive and beneficial conversations with Claude Opus 4, which excels in coding, context processing, and agentic workflows.

In conclusion, the conversation-ending capability in Claude Opus 4 and 4.1 is an experimental step toward protecting the wellbeing of both AI models and users in potentially harmful situations. The company has committed to ongoing experimentation and refinement of the feature.