A Dr. Seuss story sheds light on how the human brain perceives audiovisual speech

Researchers at the Del Monte Institute for Neuroscience have mapped the brain network involved in audiovisual speech perception, work that could help in investigating neurodevelopmental disorders.

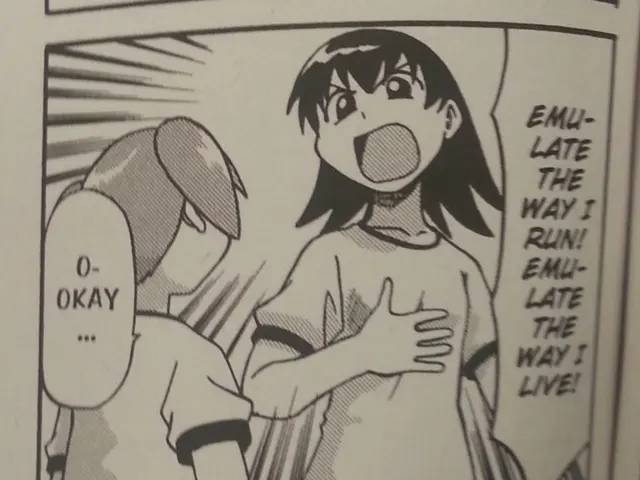

Led by John Foxe, Ph.D., the team used functional magnetic resonance imaging (fMRI) to examine the brain activity of 53 participants as they watched a video of a person reading "The Lorax." The story was presented in one of four randomly varied conditions: audio only, visual only, synchronized audiovisual, or unsynchronized audiovisual.

The results showed that seeing the speaker's facial movements increased activity in the broader semantic network and in extralinguistic regions not typically associated with multisensory integration, such as the amygdala and the primary visual cortex. According to Lars Ross, Ph.D., the study's first author, this suggests that multisensory integration plays a crucial role in detecting and identifying objects.

The researchers also found activity in thalamic regions, which are among the earliest stages at which sensory information from the eyes and ears interacts. This finding highlights the importance of these regions in the complex process of audiovisual speech perception.

The study, published in NeuroImage, also produced a detailed map of the multisensory speech integration network. This map allows researchers to ask specific questions about multisensory speech processing in neurodevelopmental disorders such as autism and dyslexia.

Additional co-authors of the study are Sophie Molholm, Ph.D., co-director of the Rose F. Kennedy IDDRC at Albert Einstein College of Medicine; Victor Bene of Albert Einstein College of Medicine; and John Butler, Ph.D., of Technological University Dublin. The research is a collaboration between two Intellectual and Developmental Disability Research Centers (IDDRCs) supported by the National Institute of Child Health and Human Development (NICHD).

Researchers such as Dr. Helen Tager-Flusberg and Dr. Kyle Jasmin are working to expand knowledge of how the brain handles complex audiovisual speech, studying children and adults on the autism spectrum to understand how audiovisual speech processing abilities develop.

The University of Rochester, designated as an IDDRC by the NICHD in 2020, is the other research center in this collaboration. The multisensory speech integration network identified in the study includes regions involved in sensory processing, multisensory integration, and the cognitive functions needed to comprehend the story's content.

The researchers say that understanding this larger network could help in investigating neurodevelopmental disorders. The team is particularly interested in how the multisensory speech integration network goes awry in various neurodevelopmental disorders, a line of inquiry that could open new avenues for future research and potential treatments.
