Claude AI Will Soon Train on User Data - Learn How to Exclude Your Information Before the Cutoff Date

Anthropic, the company behind the AI model Claude, has announced a new data policy that puts the burden on users to opt out if they want to keep their data out of model training. The change, effective immediately, applies to all consumer plans, including Free, Pro, and Max, as well as Claude Code.

The new policy comes from a company with an unusual corporate structure. Anthropic is a Delaware public-benefit corporation, meaning it is meant to balance financial interests with a public benefit mission. Its structure includes a "Long-Term Benefit Trust" for responsible AI development, which influences its governance and its approach to privacy.

Anthropic's founders and leaders, Dario Amodei (co-founder and CEO) and Daniela Amodei (co-founder and President), have emphasised the importance of safety and ethical standards in the company's policies.

Under the new policy, the future chats and code of users who opt in may be stored for years instead of weeks and used for training. Once data has been used for training, it cannot be retrieved. This means that if a user changes their mind later, data that has already been used for training cannot be withdrawn.

Deleted chats will not be used for training. The policy applies only to future chats and code, not to past conversations.

For users who discuss work projects, personal matters, or sensitive information in Claude, the update may raise privacy concerns. However, Anthropic says that it does not sell data to third parties and that it uses automated filters to scrub sensitive information before training.

New users will see the choice during sign-up, while existing users will get a pop-up titled "Updates to Consumer Terms and Policies." In the prompt, a large blue Accept button opts users in by default, while a smaller toggle lets them opt out.

Business, government, education, and API users are not affected by the new policy. Users who have not made a decision by September 28, 2025 will lose access to Claude.

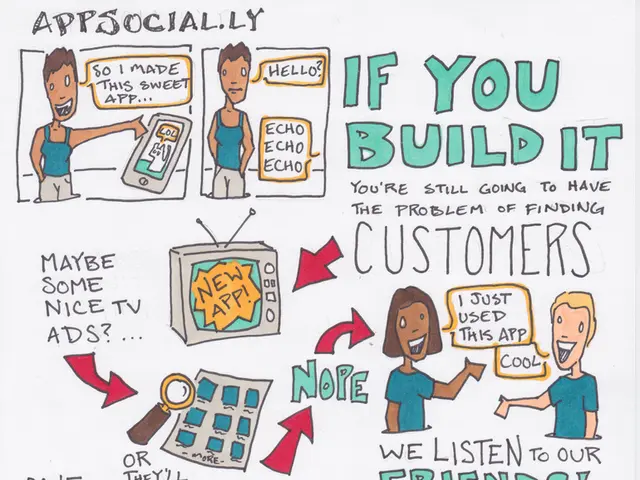

Anthropic states that user data is crucial for improving Claude's performance in coding (both "vibe" coding and traditional coding), reasoning, and safety. The change increases the amount of real-world data Claude can train on, potentially improving how it handles complex questions.

The new policy marks a move from privacy by default to data sharing by default unless users opt out. Those who prioritise privacy should consider the implications carefully before making a decision.
